Introduction

The contest between those behind disinformation, propaganda, and automated social media activity and the algorithms designed to detect and control them is an ongoing arms race. Many people expect it to be easy to tell human behaviour from bot behaviour, but the reality is far more complex.

Through my research on forums like BlackHatWorld, I gained insight into how bot farmers and trolls evade detection. Techniques such as hex editing chromedriver to alter browser fingerprints, and using device farms for physical and virtual automation, illustrate some of the tactics used to exert influence at scale while avoiding detection. These findings led me to explore whether emulating human behaviour with robotics could offer a cost-effective alternative for device automation at scale.

This article details my effort to convincingly and affordably emulate human-like operation of a device, rather than just simulating application-level activity. Hiring real humans for such tasks is almost certainly prohibitively expensive (unless you’re Saudi Arabia), so affordable orchestration of human-like activity could facilitate large-scale influence operations or the countering of disinformation without the financial strain of hiring content creators.

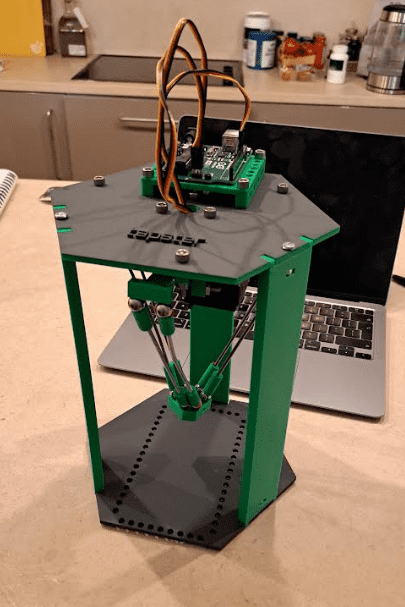

The Tapsterbot: Components and Setup

Tapsterbot is a 3D printable delta arm developed by Jason Huggins, the creator of Selenium. I understand that his current venture, Tapster.io, is a robotics company working on more sophisticated delta arms and other forms of human-like manipulation for app testing, and it is definitely worth checking out!

The Tapsterbot project’s GitHub page provides the 3D printing files and a Bill of Materials (BOM) needed to build a delta arm for approximately £70 (assuming you have a printer), along with the software required to control it.

I won’t go into too much detail, as I’d only be repeating what is available on GitHub, but here are some extra resources I relied on to build the delta arm. 1 2 3

The key components of the Tapsterbot include:

- Motors: Three Hitec HS-311 servo motors, combined with some advanced trigonometric and kinematic mathematics that I don’t fully grasp, allow the delta arm to move effortlessly through three-dimensional space.

- Arduino Microcontroller: The Arduino controls the motors based on input commands, translating them into physical movements of the delta arm.

- 3D Printed Delta Arm: The core structure of the Tapsterbot consists of a top plate suspended above a bottom plate, with an arm made of rods and magnets. A stylus is then inserted into the delta arm ‘hand’.

To control the Tapsterbot’s delta arm, commands must be sent to the Arduino, which then translates them into motor movements. This is done using a Node.js server. The main ‘go’ function in the server takes a user-supplied set of coordinates entered in the terminal, handles the complicated maths, and then moves the servos.

```javascript
go = function(x, y, z, easeType) {
  var pointB = [x, y, z];

  if (easeType == "none") {
    moveServosTo(pointB[0], pointB[1], pointB[2]);
    return; // Ensures that it doesn't move twice
  } else if (!easeType) {
    easeType = defaultEaseType; // If no easeType is specified, go with default (specified in config.js)
  }

  // motion.move(current, pointB, steps, easeType, delay);
  var points = motion.getPoints(current, pointB, steps, easeType);

  for (var i = 0; i < points.length; i++) {
    setTimeout(function(point) {
      moveServosTo(point[0], point[1], point[2]);
    }, i * delay, points[i]);
  }
}
```

The moveServosTo function calculates the angles for three servos to reach a specified position (x, y, z) by first reflecting and rotating the input coordinates, then applying inverse kinematics to determine the appropriate servo angles. It maps these angles to the specific input-output range of each servo based on their configuration settings, commands the servos to move to these positions, and logs the calculated angles for monitoring purposes.
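The range-mapping step at the end of that description can be sketched roughly as follows. This is an illustration rather than the Tapsterbot source: the names `mapRange` and `toServoCommand`, the shape of `servoConfig`, and all of the numbers in it are assumptions made for the example.

```javascript
// Hypothetical calibration for one servo: the angle range the kinematics
// produce (degrees) and the command range that this servo actually needs.
const servoConfig = {
  inMin: 0, inMax: 90,     // assumed kinematic angle range
  outMin: 10, outMax: 100, // assumed servo command range
};

// Linear range mapping, similar in spirit to Arduino's map() but without
// integer truncation.
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) * (outMax - outMin)) / (inMax - inMin);
}

// Map a kinematic angle to a servo command and clamp it, so that a bad
// kinematics result cannot drive the servo past its configured limits.
function toServoCommand(angle, cfg) {
  const mapped = mapRange(angle, cfg.inMin, cfg.inMax, cfg.outMin, cfg.outMax);
  const lo = Math.min(cfg.outMin, cfg.outMax);
  const hi = Math.max(cfg.outMin, cfg.outMax);
  return Math.min(hi, Math.max(lo, mapped));
}
```

Keeping a separate configuration per servo is what would let three nominally identical motors be trimmed individually, which matters when small differences between them show up as positional error at the stylus tip.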

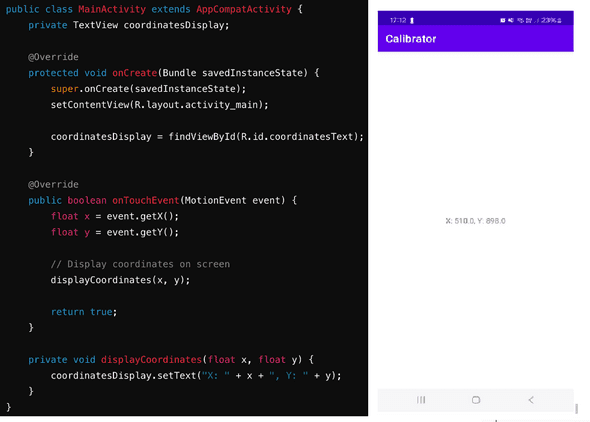

Mapping UI elements to delta arm position

One article was particularly useful in helping map UI elements to the delta arm’s position. It recommended using a mobile app to display the coordinates of a finger or stylus on the screen. Building such an app was relatively simple: it printed the coordinates on an Android device whenever a touch event was detected.

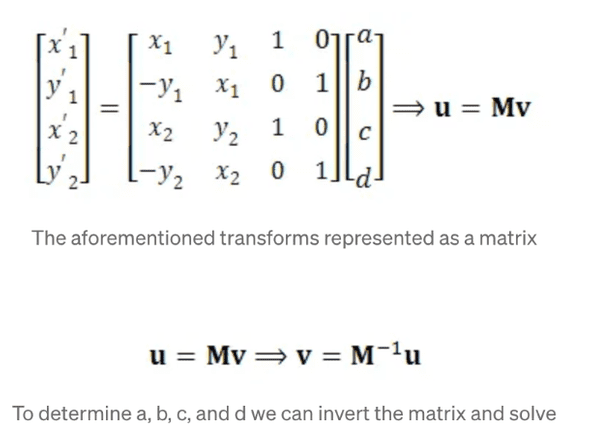

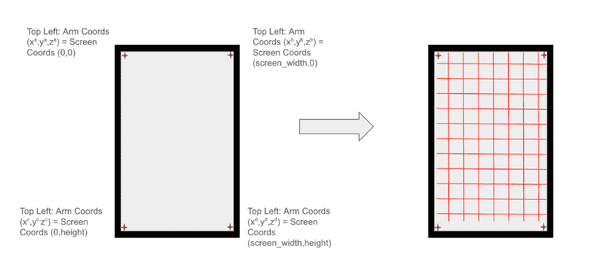

The article describes a calibration routine that involves collecting two points on the screen. Assuming a consistent z-axis (up and down relative to the phone screen), it explains how these points can be used to determine every other coordinate on the screen through two-dimensional transformations.
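To make the idea concrete, here is a minimal sketch of one way a two-point calibration can work. It is not the routine from the article I followed: it assumes the screen and the arm's working plane differ only by a scale, a rotation, and an offset (no mirroring or skew), and the function names are made up for the example. Points are treated as complex numbers, so the whole transform is `arm = a * screen + b`.

```javascript
// Complex multiplication and division on [re, im] pairs.
function cmul(a, b) {
  return [a[0] * b[0] - a[1] * b[1], a[0] * b[1] + a[1] * b[0]];
}
function cdiv(a, b) {
  const d = b[0] * b[0] + b[1] * b[1];
  return [(a[0] * b[0] + a[1] * b[1]) / d, (a[1] * b[0] - a[0] * b[1]) / d];
}

// Fit arm = a * screen + b from two matched points.
// s1, s2 are screen coordinates; r1, r2 are the arm coordinates that hit them.
function fitTwoPoint(s1, r1, s2, r2) {
  const a = cdiv([r2[0] - r1[0], r2[1] - r1[1]], [s2[0] - s1[0], s2[1] - s1[1]]);
  const as1 = cmul(a, s1);
  const b = [r1[0] - as1[0], r1[1] - as1[1]];
  // Return a function that converts any screen point to an arm position.
  return function screenToArm(s) {
    const p = cmul(a, s);
    return [p[0] + b[0], p[1] + b[1]];
  };
}

// Example with invented calibration points.
const screenToArm2 = fitTwoPoint([0, 0], [10, 20], [100, 0], [10, 70]);
console.log(screenToArm2([0, 100]));
```

A transform like this has only four degrees of freedom, so any bend, tilt, or non-linearity in the physical build has nowhere to go except into positional error.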

This approach didn’t work for me. I’m uncertain whether the issue was due to inconsistencies in my Tapsterbot build, such as slightly bent or unevenly sized arms, or whether the mathematics involved was too complex to be reduced to a single-plane calculation. Regardless, I encountered inaccuracies, particularly at the edges of the screen, which prevented Appium instructions from being accurately translated into the correct delta arm positions.

The next step in addressing the challenges involved a more intricate calibration process. Initially, the corners of the screen’s coordinates were determined manually by moving the delta arm to each extremity.

From there, a more automated approach was developed. Using the node server, the delta arm was programmatically guided to trace a grid pattern across the screen. This grid mapped the relationship between the delta arm’s movements and the corresponding points on the screen.
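A grid like this can be generated from the four manually found corners. The sketch below is an assumption about how one might do it, not a copy of my calibration script: `gridPoints` and the corner names are hypothetical, and the serpentine ordering is simply a way to avoid long return strokes between rows.

```javascript
// Linear interpolation between two [x, y] points.
function lerp(p, q, t) {
  return [p[0] + (q[0] - p[0]) * t, p[1] + (q[1] - p[1]) * t];
}

// Build rows x cols arm positions spanning the four corners, visiting
// alternate rows in reverse so the arm sweeps back and forth.
function gridPoints(corners, rows, cols) {
  const { topLeft, topRight, bottomLeft, bottomRight } = corners;
  const points = [];
  for (let r = 0; r < rows; r++) {
    const v = rows === 1 ? 0 : r / (rows - 1);
    const left = lerp(topLeft, bottomLeft, v);
    const right = lerp(topRight, bottomRight, v);
    const row = [];
    for (let c = 0; c < cols; c++) {
      const u = cols === 1 ? 0 : c / (cols - 1);
      row.push(lerp(left, right, u));
    }
    if (r % 2 === 1) row.reverse();
    points.push(...row);
  }
  return points;
}
```

Each returned point would then be passed to the server's `go(x, y, z)` with a fixed touch height. A 10 by 10 grid gives the roughly 100 positions used for the mapping.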

By connecting to the device via ADB (Android Debug Bridge), the stylus positions were recorded at each point along the grid. This process allowed approximately 100 unique screen positions to be mapped to 100 corresponding delta arm positions.
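One way to read touch positions over ADB is to parse the output of `adb shell getevent -l`, which prints labelled input events with hexadecimal values. The parser below is a sketch under that assumption, not necessarily what my setup did. The function name is hypothetical, and the values are raw touch-panel units that may need scaling to screen pixels on some devices.

```javascript
// Parse `getevent -l` text and return one {x, y} per complete touch report.
// Only reports that carry both an X and a Y value are kept, which is enough
// for recording the first contact of a tap.
function parseTouches(output) {
  const touches = [];
  let x = null;
  let y = null;
  for (const line of output.split("\n")) {
    const m = line.match(/EV_(?:ABS|SYN)\s+(\S+)\s+([0-9a-f]+)/i);
    if (!m) continue;
    const [, code, hex] = m;
    if (code === "ABS_MT_POSITION_X") {
      x = parseInt(hex, 16);
    } else if (code === "ABS_MT_POSITION_Y") {
      y = parseInt(hex, 16);
    } else if (code === "SYN_REPORT" && x !== null && y !== null) {
      touches.push({ x, y });
      x = null;
      y = null;
    }
  }
  return touches;
}

// Sample input in the shape getevent prints; the device path is illustrative.
const sample = [
  "/dev/input/event1: EV_ABS       ABS_MT_POSITION_X    0000021c",
  "/dev/input/event1: EV_ABS       ABS_MT_POSITION_Y    000003a5",
  "/dev/input/event1: EV_SYN       SYN_REPORT           00000000",
].join("\n");
console.log(parseTouches(sample)); // [ { x: 540, y: 933 } ]
```

Pairing each parsed touch with the arm position that produced it yields the table of matched screen and arm coordinates.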

After the initial grid mapping, a period of experimentation with various algorithms was conducted to identify the most precise way to represent the relationship between the delta arm’s movements and the screen coordinates. The aim was to ensure the delta arm could achieve a high enough level of accuracy to replicate typing actions in Appium using the on-screen keyboard, making these interactions consistent and reliable on the actual device.
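As an example of the kind of algorithm that can be tried here, the sketch below estimates an arm position by inverse-distance weighting over the nearest recorded samples. It is one candidate among several and is shown only to illustrate the shape of the problem. The function name, the sample format, and the choice of `k = 4` are all assumptions.

```javascript
// samples: [{ screen: [x, y], arm: [x, y] }, ...] collected during calibration.
// Returns an estimated arm [x, y] for the target screen [x, y].
function estimateArmPosition(samples, target, k = 4) {
  if (samples.length === 0) throw new Error("no calibration samples");
  // Rank samples by squared distance to the target in screen space.
  const ranked = samples
    .map((s) => ({
      s,
      d2: (s.screen[0] - target[0]) ** 2 + (s.screen[1] - target[1]) ** 2,
    }))
    .sort((a, b) => a.d2 - b.d2)
    .slice(0, k);
  // An exact hit on a calibration point needs no interpolation.
  if (ranked[0].d2 === 0) return ranked[0].s.arm.slice();
  // Weight each neighbour by 1 / distance^2 so closer samples dominate.
  let wSum = 0;
  let x = 0;
  let y = 0;
  for (const { s, d2 } of ranked) {
    const w = 1 / d2;
    wSum += w;
    x += w * s.arm[0];
    y += w * s.arm[1];
  }
  return [x / wSum, y / wSum];
}
```

A local method like this can absorb distortion that varies across the screen, which a single global transform cannot, at the cost of depending on how densely the grid was sampled.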

Once a suitable algorithm was selected, the next step was to configure Appium to send commands that could convert screen instructions into delta arm positions, enabling the arm to type on the device. I modified Appium to integrate with the delta arm server, adding a command line flag to specify the server’s address.

When this flag is provided, Appium redirects all gesture commands, which would typically go to the automation API, to the robot server through a REST API call. The server then translates these commands into delta arm movements, allowing the robot to perform the actions, such as typing, and reports the results back to Appium. This setup ensured seamless(-ish!) conversion of screen interactions into precise physical gestures executed by the delta arm.
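The routing decision can be sketched in a few lines. This is not the actual Appium patch: `makeGestureRouter`, the `/tap` path, and the payload shape are hypothetical, and the two send functions are injected so the sketch stays independent of any particular HTTP client or automation backend.

```javascript
// Build a tap handler that forwards to the robot server when an address was
// supplied, and otherwise falls back to the normal on-device automation path.
function makeGestureRouter({ robotAddress, sendToRobot, sendToDevice }) {
  return function performTap(x, y) {
    if (!robotAddress) {
      return sendToDevice({ action: "tap", x, y });
    }
    // Screen coordinates are sent as-is; converting them to arm positions
    // is assumed to happen on the robot server.
    return sendToRobot(`${robotAddress}/tap`, { x, y });
  };
}
```

In a real integration, `sendToRobot` would wrap an HTTP POST and return a promise that resolves once the arm has finished moving, so that Appium can report the result of the gesture.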

Despite some occasional erratic movements, which were difficult to diagnose and troubleshoot, the system was largely successful. The delta arm could reliably type and interact with a wide range of social media platforms, effectively replicating typical user behaviours such as tapping, scrolling, and typing text.

During this project I experimented with a “fire and forget” approach to interacting with the device: sending instructions without the feedback bridge enabled by ADB. While it is possible to maintain a constant connection between Appium and the device via ADB, allowing real-time feedback on the delta arm’s movements, I suspected that, depending on the application’s permissions, detecting ADB activity could be relatively easy.

Since ADB is often associated with automation or testing environments, certain apps might use this detection as a signal that automation is in play, which could trigger defensive measures, such as limiting functionality or altering the user interface. This would undermine the goal of mimicking natural human interactions undetected.

While the fire-and-forget approach was feasible, it became problematic with longer chains of interactions. For instance, when trying to type multiple social media posts or perform extended sequences of actions, the system’s reliability diminished. To maintain accuracy, it was necessary to begin each task from a known starting point, such as restarting the app or resetting the device to a specific screen. This allowed the delta arm to execute a single set of instructions without accumulating errors from previous interactions.

I will hopefully find time for future experimentation. I suspect a solution for handling more complex interactions will involve using two devices. One device would remain connected to ADB, actively browsing social media applications and identifying posts to interact with. This device could leverage a large language model (LLM) or similar AI to generate appropriate responses to posts. The second device, isolated from ADB to avoid detection, would be operated by the delta arm, executing the actual posting of the generated content.

This setup could address the limitations of the current “fire and forget” approach by separating the content generation and interaction tasks. The ADB-connected device could handle the intelligence-heavy operations, such as parsing social media content, analysing sentiment, and determining the context for responding. Meanwhile, the non-bridged delta arm device would focus solely on the manual task of typing and posting, hopefully maintaining a lower profile by avoiding ADB activity that might be detected by the app. If the timing of button presses or the trajectory of a drag across the screen turned out to be detectable, these could also be randomised or modelled on recorded human input.
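Randomising the time between key presses could look something like the sketch below. The function name and the default timings are assumptions chosen for illustration, not measurements of real typing. The random source is injectable so the behaviour can be reproduced when debugging.

```javascript
// Generate `count` delays (in ms) scattered around meanMs by up to jitterMs.
function keyDelays(count, { meanMs = 180, jitterMs = 90, rng = Math.random } = {}) {
  const delays = [];
  for (let i = 0; i < count; i++) {
    // Averaging two uniform draws gives a triangular distribution, so most
    // delays fall near the mean and extremes are less common.
    const r = (rng() + rng()) / 2;
    delays.push(Math.round(meanMs + (r * 2 - 1) * jitterMs));
  }
  return delays;
}

// Turn per-key delays into absolute offsets suitable for setTimeout.
function scheduleOffsets(delays) {
  let t = 0;
  return delays.map((d) => (t += d));
}
```

The offsets from `scheduleOffsets` could replace the fixed `i * delay` spacing used when queuing movements, so that consecutive taps are no longer evenly spaced.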

In conclusion, while the challenges in developing an affordable system that uses delta arms to automate social media interactions at scale are significant, they don’t feel insurmountable. However, I lack the expertise to determine whether this approach would successfully evade detection over a longer period. Scaling the process to operate with 5, 10, or even 100 delta arms could significantly reduce the barrier to entry for conducting semi-sophisticated influence operations without relying heavily on human labour. This approach could unlock new possibilities for managing information dissemination and efficiently countering disinformation.